Systems Approach

PSNet primers are regularly reviewed and updated by the UC Davis PSNet Editorial Team to ensure that they reflect current research and practice in the patient safety field. Last reviewed in 2024.

Background

A 65-year-old woman presented to the outpatient surgery department of one of the most respected hospitals in the United States for a relatively routine procedure, a trigger finger release on her left hand. Instead, the surgeon performed a completely different procedure: a carpal tunnel release. How could this happen?

Medicine has traditionally treated errors as failings on the part of individual providers, reflecting inadequate knowledge or skill. The systems approach, by contrast, takes the view that most errors reflect predictable human failings in the context of poorly designed systems (e.g., expected lapses in human vigilance in the face of long work hours or predictable mistakes on the part of relatively inexperienced personnel faced with cognitively complex situations). Rather than focusing corrective efforts on punishment or remediation, the systems approach seeks to identify situations or factors likely to give rise to human error and change the underlying systems of care in order to reduce the occurrence of errors or minimize their impact on patients.

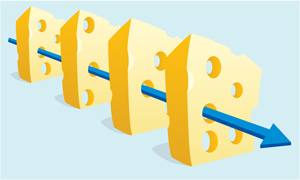

The modern field of systems analysis was pioneered by the British psychologist James Reason, whose analysis of industrial accidents led to fundamental insights about the nature of preventable adverse events. Reason's analysis of errors in fields as diverse as aviation and nuclear power revealed that catastrophic safety failures are almost never caused by isolated errors committed by individuals. Instead, most accidents result from multiple, smaller errors in environments with serious underlying system flaws. Reason introduced the Swiss Cheese model to describe this phenomenon. In this model, errors made by individuals result in disastrous consequences due to flawed systems—the holes in the cheese. This model not only has tremendous explanatory power, it also helps point the way toward solutions—encouraging personnel to try to identify the holes and to both shrink their size and create enough overlap so that they never line up in the future.

Figure. The Swiss Cheese Model of Medical Errors
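A back-of-the-envelope calculation can make the model concrete. The sketch below assumes that each defensive layer fails independently with a fixed probability. Both the independence assumption and the numbers are illustrative only; they are not drawn from Reason's work or from clinical data, and the layer names are hypothetical.

```python
from math import prod


def probability_of_harm(layer_failure_probs):
    """Chance that an error passes every layer, assuming independent layers."""
    for p in layer_failure_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must be between 0 and 1")
    # Harm occurs only when the holes in every layer line up.
    return prod(layer_failure_probs)


# Hypothetical layers: scheduling check, site marking, time-out, nurse monitoring.
baseline = [0.1, 0.1, 0.1, 0.1]
# The same system when one layer (here, the time-out) is skipped entirely.
time_out_skipped = [0.1, 0.1, 1.0, 0.1]

print(probability_of_harm(baseline))
print(probability_of_harm(time_out_skipped))
```

With these made-up numbers, four intact layers let through about 1 error in 10,000, and losing a single layer raises that to about 1 in 1,000. In practice, one latent condition can weaken several layers at once, so the independence assumption tends to be optimistic.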

Another of Reason's key insights, one that sadly remains underemphasized today, is that human error is inevitable, especially in systems as complex as health care. Simply striving for perfection, or punishing individuals who make mistakes, will not appreciably improve safety, because expecting flawless performance from human beings working in complex, high-stress environments is unrealistic. The systems approach holds that efforts to anticipate human errors before they occur, or to block them from causing harm, will ultimately be more fruitful than efforts that seek to somehow create flawless providers.

Reason used the terms active errors and latent errors to distinguish individual from system errors. Active errors almost always involve frontline personnel and occur at the point of contact between a human and some aspect of a larger system (e.g., a human–machine interface). By contrast, latent errors are literally accidents waiting to happen—failures of organization or design that allow the inevitable active errors to cause harm.

The terms sharp end and blunt end correspond to active error and latent error. Personnel at the sharp end may literally be holding a scalpel when the error is committed (e.g., the surgeon who performed the incorrect procedure) or figuratively be administering any kind of treatment. The blunt end refers to the many layers of the health care system not in direct contact with patients, but which influence the personnel and equipment at the sharp end that come into contact with patients. The blunt end thus consists of those who set policy, manage health care institutions, or design medical devices, and other people and forces, which—though removed in time and space from direct patient care—nonetheless affect how care is delivered.

Errors at the sharp end can be further classified into slips and mistakes, based on the cognitive psychology of task-oriented behavior. Attentional behavior is characterized by conscious thought, analysis, and planning, as occurs in active problem solving. Schematic behavior refers to the many activities we perform reflexively, or as if acting on autopilot. In this construct, slips represent failures of schematic behaviors, or lapses in concentration, and occur in the face of competing sensory or emotional distractions, fatigue, or stress. Mistakes, by contrast, are incorrect choices, and more often stem from lack of experience, insufficient training, or outright negligence.

The work of James Reason and Dr. Charles Vincent, another pioneer in the field of error analysis, has established a commonly used classification scheme for latent errors that includes causes ranging from institutional factors (e.g., regulatory pressures) to work environmental factors (e.g., staffing issues) and team factors (e.g., safety culture). These are discussed in more detail in the Root Cause Analysis Primer.

In the incorrect surgery case, the active, or sharp end, error was quite literally committed by the surgeon holding the scalpel. As in most cases, the active error is better classified as a slip, despite the complexity of the procedure. The surgeon was distracted by competing patient care needs (an inpatient consultation) and an emotionally taxing incident (a previous patient suffered extreme anxiety immediately after her operation, and the surgeon had to console her). However, analysis of the incident also revealed many latent, or blunt end, causes. The procedure was the surgeon's last of six scheduled procedures that day, and delays in the outpatient surgery suite had led to production pressures as well as unexpected changes in the makeup of the operating room team. Furthermore, the patient spoke only Spanish and no interpreter was available, meaning that the surgeon (who also spoke Spanish) was the only person to communicate directly with the patient; as a result, no formal time-out was performed. Computer monitors in the operating room had been placed in such a way that viewing them forced nurses to turn away from the patient, limiting their ability to monitor the surgery and perhaps detect the incorrect procedure before it was completed.

Analyzing Errors Using the Systems Approach

The systems approach provides a framework for analysis of errors and efforts to improve safety. There are many specific techniques that can be used to analyze errors, including retrospective methods such as root cause analysis (or the more generic term systems analysis) and prospective methods such as failure modes effect analysis. Three widely used tools for investigating and responding to patient safety events and near misses are discussed in detail in the Strategies and Approaches for Investigating Patient Safety Events primer.

Failure modes effect analysis (FMEA) attempts to prospectively identify error-prone situations, or failure modes, within a specific process of care. FMEA begins with identifying all the steps that must take place for a given process to be completed. Once this process mapping is complete, the FMEA continues by identifying the ways in which each step can go wrong, the likelihood of each such failure, the probability that each error can be detected, and the consequences or impact of the error not being detected. The estimates of the likelihood of a particular process failure, the chance of detecting such a failure, and its impact are combined numerically to produce a criticality index. This criticality index provides a rough quantitative estimate of the magnitude of hazard posed by each step in a high-risk process. Assigning a criticality index to each step allows prioritization of targets for improvement.
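The text does not specify how the three estimates are combined. One common convention in FMEA practice, assumed here, is to rate each factor on a 1 to 10 scale and multiply the ratings (a figure often called a risk priority number). The sketch below follows that convention; the class name, field names, scales, and example ratings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    step: str
    likelihood: int     # 1 (rare) to 10 (almost certain)
    detectability: int  # 1 (almost always caught) to 10 (almost never caught)
    impact: int         # 1 (negligible) to 10 (catastrophic)

    def criticality_index(self) -> int:
        """Multiply the three ratings (an assumed convention, not the only one)."""
        for name in ("likelihood", "detectability", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")
        return self.likelihood * self.detectability * self.impact


# Hypothetical ratings for a single step in a surgical process.
site_check = FailureMode("Verify surgical site", likelihood=4, detectability=7, impact=9)
print(site_check.step, site_check.criticality_index())
```

Note that detectability is scored so that a higher number means the failure is harder to catch, which keeps all three ratings pointing in the same direction: higher is more hazardous.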

For instance, an FMEA of the medication-dispensing process on a general hospital ward might break down all steps from receipt of orders in the central pharmacy to filling automated dispensing machines by pharmacy technicians. Each step in this process would be assigned a probability of failure and an impact score, so that all steps could be ranked according to the product of these two numbers. Steps ranked at the top (i.e., those with the highest criticality indices) would be prioritized for error proofing.
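The ranking described in this example can be sketched in a few lines. The step names between the first and last, and every probability and impact score, are invented for illustration and do not come from any real FMEA.

```python
def rank_steps(steps):
    """Return (step, score) pairs sorted from highest to lowest score.

    Each input item is (step name, probability of failure, impact score),
    and the score is the product of the last two values.
    """
    scored = [(name, probability * impact) for name, probability, impact in steps]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical medication-dispensing steps with made-up estimates.
dispensing_steps = [
    ("Receive order in central pharmacy", 0.02, 6),
    ("Transcribe order", 0.05, 8),
    ("Select drug from stock", 0.03, 9),
    ("Fill automated dispensing machine", 0.04, 7),
]

for name, score in rank_steps(dispensing_steps):
    print(f"{score:.2f}  {name}")
```

With these numbers, the transcription step ranks first and would be the first target for error proofing. Small changes in the estimates can reorder closely scored steps, so the ranking is best read as a rough guide.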

FMEA makes sense as a general approach and has been used in other high-risk industries. However, the reliability of the technique and its utility in health care are not clear. Different teams charged with analyzing the same process may identify different steps in the process, assign different risks to the steps, and consequently prioritize different targets for improvement. Similar concerns have been raised about root cause analysis.

Developing Solutions for Active and Latent Errors

In attempting to prevent active errors, the differentiation between slips and mistakes is crucial, as the solutions to these two types of errors are very different. Reducing the risk of slips requires attention to the designs of protocols, devices, and work environments—using checklists so key steps will not be omitted, implementing forcing functions to minimize workarounds, removing unnecessary variation in the design of key devices, eliminating distractions from areas where work requires intense concentration, and implementing other redesign techniques. Reducing the likelihood of mistakes, on the other hand, typically requires more training or supervision, perhaps accompanied by a change in position if the mistake is made habitually by the same worker, or disciplinary action if it is due to disruptive or unprofessional behavior. Although slips are vastly more common than mistakes, health care has typically responded to all errors as if they were mistakes, resorting to remedial education and/or added layers of supervision. Such an approach may have an impact on the behavior of the individual who committed an error but does nothing to prevent other frontline workers from committing the same error, leaving patients at risk of continued harm unless broader, more systemic, solutions are implemented.
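A forcing function makes the unsafe path unavailable instead of relying on someone remembering the safe one. The sketch below illustrates the idea with a hypothetical pre-procedure time-out: the procedure cannot be started until every required check has been confirmed. The check names, the exception, and the function are assumptions made for illustration, not a description of any real clinical system.

```python
class TimeOutIncomplete(Exception):
    """Raised when a procedure is started before the time-out is complete."""


# Hypothetical checklist items for a surgical time-out.
REQUIRED_CHECKS = (
    "patient identity confirmed",
    "procedure confirmed",
    "surgical site confirmed",
)


def start_procedure(confirmed_checks):
    """Allow the procedure to begin only if every required check is confirmed."""
    missing = [check for check in REQUIRED_CHECKS if check not in confirmed_checks]
    if missing:
        # The forcing function: there is no way to proceed with gaps.
        raise TimeOutIncomplete("cannot start; missing: " + ", ".join(missing))
    return "procedure may begin"


try:
    start_procedure({"patient identity confirmed"})
except TimeOutIncomplete as error:
    print(error)

print(start_procedure(set(REQUIRED_CHECKS)))
```

The design choice is that an incomplete checklist raises an error and stops the process, where a softer design would only log a warning. A warning can be ignored under production pressure; a hard stop cannot be bypassed without a deliberate workaround.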

Summary

Addressing latent errors requires a concerted approach to revising how systems of care work, how protocols are designed, and how individuals interact with the system. Specific solutions thus vary widely depending on the type of latent error, the severity of the error, and the resources (financial, time, and personnel) available to address the problem. An appropriate systems approach to improving safety requires paying attention to human factors engineering, including the design of protocols, schedules, and other factors that are routinely addressed in other high-risk industries but are only now being analyzed in medicine. Creating a culture of safety, in which reporting of active errors is encouraged, analysis of errors to identify latent causes is standard, and frontline workers are not punished for committing slips, is also essential for finding and fixing systemic flaws in health care systems.