Unintended Consequences of CPOE

Wears RL. Unintended Consequences of CPOE. PSNet [internet]. Rockville (MD): Agency for Healthcare Research and Quality, US Department of Health and Human Services. 2016.


Case Objectives

- Explain how technology, including computerized provider order entry, can transform, rather than eliminate, hazards.

- Recognize the risks inherent in poor usability.

- List common design flaws in the development of picklists that may lead to errors.

- Describe a possible way to monitor for picklist errors.

- Discuss the difference between work-as-imagined and work-as-done.

The Case

A 60-year-old woman with a history of metastatic colon cancer and known malignant ascites was admitted to the hospital with abdominal pain, nausea, and vomiting. She presented with sepsis and acute kidney injury with a creatinine of 2.0 mg/dL (up from a baseline of 0.8 mg/dL). She underwent paracentesis and was found to have evidence of acute bacterial peritonitis. She was started on broad-spectrum antibiotics.

The cultures from her peritoneal fluid grew multiple bacterial species, raising concerns for bowel perforation. Her creatinine improved but remained elevated at 1.6 mg/dL and the team felt the persistent acute kidney injury was probably from her sepsis. The team decided to order a CT scan of the abdomen and pelvis with oral contrast. After a discussion, they decided to order the scan without intravenous contrast, out of concern for causing worsening renal function (i.e., contrast nephropathy).

The intern caring for the patient was rotating at this hospital for the first time and was new to the computer system; in fact, she had not ordered a CT scan through the system yet. She only vaguely remembered the necessary steps from the online computer module she had completed 5 weeks earlier as part of her orientation to the training program.

When she entered the order for the CT scan, she was presented with a long list of options that were labeled in many different ways. She scanned the list and chose the one named "CT Abdomen and Pelvis with contrast," assuming that she'd need to order the oral contrast separately. She placed the CT scan order, along with a separate order for the oral contrast.

Unfortunately, the CT scan that she ordered had, bundled with it, the use of oral contrast and intravenous contrast. The patient therefore received both kinds of contrast during the scan. The next day, when the team reviewed the images and saw evidence of both kinds of contrast, they recognized the error. The patient's creatinine worsened over the next few days, rising to a peak of 3.1 mg/dL. The team caring for the patient felt this was consistent with contrast nephropathy.

The patient remained in the hospital for 6 more days for monitoring and adjustment of her other medications. She ultimately was discharged to an inpatient hospice facility.

The Commentary

by Robert L. Wears, MD, PhD

Computerized provider order entry (CPOE) has long been viewed as a "Holy Grail" for patient safety. Because of its envisioned advantages, CPOE implementation was one of the Leapfrog Group's initial set of recommendations in 1999 (1), even before patient safety catapulted into the spotlight following the IOM report, To Err Is Human.

In theory, CPOE offers numerous advantages over traditional paper-based order systems: it eliminates illegible handwriting, provides integration with electronic medical records and decision support systems, and enables faster data and order transmission to pharmacy and radiology.(2) In practice, these advantages have proven elusive, at least on a widespread scale. A robust narrative review found that CPOE with decision support did not prevent adverse drug events.(3) Although CPOE did lead to safety advantages, these benefits have been undermined by new risks and other unintended consequences.(4,5) In this, CPOE has recapitulated well-known experience with the introduction of new technologies in other spheres—some paths to failure are closed off, but new ones open up that may be harder to detect and manage.(6) For example, the introduction of advanced flight management systems in commercial aviation in the 1990s increased the cognitive demands on pilots and led to new forms of failure, including some catastrophic accidents.(7) By 2010, CPOE and other health information technology (IT) problems were concerning enough to be placed on the ECRI Institute's list of top 10 health care hazards.(8)

Had we not been blinded by wishful thinking and technological utopianism, we would have known better. Computer experts have long cautioned that new technology "… is always an intervention into an ongoing world of activity. It alters what is already going on—the everyday practices and concerns of a community of people—and leads to a resettling into new practices," which in turn create new possibilities for both success and failure.(9) In other words, we should have foreseen that the introduction of electronic health records (EHRs) and CPOE would require new and different workflows and practices, inherently creating opportunities for new kinds of failure.

Poor usability—a computer–user interface that contains embedded traps leading users into mistakes—appeared to play a role in this case. Research has shown that poor usability can lead to adverse events in health care. In a study of medication errors facilitated by CPOE, researchers identified 18 fundamental problems in CPOE systems.(5) Subsequently, usability experts traced 12 of the 18 problems to usability design flaws.(10) The problem is that the human mind is exquisitely tailored to make sense of the world; given the slightest clue, off the mind goes, unbidden and free of conscious control, making generally good assumptions about the way things work, what is going on, and appropriate actions.(11) Poorly designed artefacts subvert this incredible faculty by providing few clues or clear paths, or even worse, providing misleading or confusing clues and error traps. These poor interfaces transform old problems into new ones; they eliminate the problem of illegible or confusing handwriting, but replace it with errors associated with picking the wrong item in a drop-down list, which may result in prescribing the wrong drug, dose, or route of a medication; prescribing to the wrong patient; or selecting the wrong diagnostic test as in this case.(12) These types of errors are known as picklist errors.

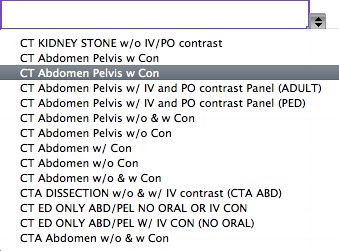

Picklist errors can come from a variety of interface flaws: (i) entries can be too close together, so users accidentally click a row adjacent to the one they intended; (ii) the user and screen can be misaligned leading the user to select an entry other than the one intended (for example, if the user is standing but the screen is at sitting height, the parallax effect can change the apparent position of an object depending on the sight line of the viewer); (iii) choices can be presented in an illogical order; (iv) lists can be so long that users choose a top entry (presuming it is most appropriate) rather than scrolling to the bottom; (v) entries themselves can be long and largely identical with the small amount of differentiating text far to the right or even off-screen that results in selecting the wrong item.(13) Although we aren't given the details in this case, a typical picklist resulting from typing "CT abd" into an order search might be displayed in a box about 3 inches tall containing something like the 14 entries in the Figure, many of which are similar in appearance and might easily be selected by mistake given time pressure, low light, multitasking, noise, alarms, interruptions, and so on. Good picklists use clean typography with good contrast, allow plenty of space between items, take care to distinguish easily confused choices, limit the number of choices to avoid the need to scroll, and enable easy back-tracking from erroneous choices.(14)
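Two of the flaws listed above, duplicate entries and entries that differ only far to the right, can be checked mechanically. The following is a minimal sketch of such a check, not a description of any real CPOE product: the function names, the `visible_chars` threshold, and the sample entry names are all hypothetical, and a real audit would need to account for the actual rendered width of the list.

```python
from itertools import combinations


def shared_prefix_len(a: str, b: str) -> int:
    """Count how many leading characters two strings have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def flag_confusable(entries, visible_chars=25):
    """Return (entry_a, entry_b, reason) for pairs that are easy to confuse.

    A pair is flagged when the two labels are identical (ignoring case), or
    when they only start to differ at or beyond `visible_chars` characters,
    an assumed stand-in for "the differentiating text is far to the right".
    """
    flagged = []
    for a, b in combinations(entries, 2):
        a_norm, b_norm = a.casefold(), b.casefold()
        if a_norm == b_norm:
            flagged.append((a, b, "duplicate"))
        elif shared_prefix_len(a_norm, b_norm) >= visible_chars:
            flagged.append((a, b, "differs only far to the right"))
    return flagged


if __name__ == "__main__":
    # Hypothetical picklist entries, for illustration only.
    picklist = [
        "CT Abdomen and Pelvis with contrast",
        "CT Abdomen and Pelvis without contrast",
        "CT Abdomen and Pelvis with contrast",
        "CT Chest",
    ]
    for a, b, reason in flag_confusable(picklist):
        print(f"{reason}: {a!r} / {b!r}")
```

A check like this only catches textual look-alikes. It says nothing about spacing, ordering, or list length, which still call for usability evaluation with real users.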

Picklist errors are common, and organizations have ways to identify the most frequent picklist problems. One important clue can come in the form of Order/Cancel pairs or Order/Cancel/Reorder triples. These are instances where a provider places an order, recognizes it was not as desired, retracts it, and then optionally enters a new but closely related order, and they are useful indicators of picklist problems. System administrators can use the frequency of such pairs and triples to identify and correct the most common picklist problems.(15) Such monitoring might have identified this and related problems prior to their being discovered in the context of patient injury, as occurred in this case.
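The counting of Order/Cancel pairs and Order/Cancel/Reorder triples described above might be sketched as follows. This is an illustration under stated assumptions rather than the method used in the cited study: the `OrderEvent` fields, the 10-minute window, and the rule that any different item ordered by the same provider for the same patient counts as the "reorder" are all hypothetical simplifications.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class OrderEvent:
    provider: str
    patient: str
    item: str       # orderable name as shown in the picklist
    action: str     # "order" or "cancel"
    time: datetime


def same_context(a: OrderEvent, b: OrderEvent) -> bool:
    """True when both events involve the same provider and patient."""
    return a.provider == b.provider and a.patient == b.patient


def find_retract_patterns(events, window=timedelta(minutes=10)):
    """Count (cancelled_item, reordered_item) patterns.

    reordered_item is None for a plain Order/Cancel pair. The window is an
    assumed cutoff applied to both the order-to-cancel gap and the
    cancel-to-reorder gap.
    """
    ordered = sorted(events, key=lambda e: e.time)
    counts = Counter()
    for i, first in enumerate(ordered):
        if first.action != "order":
            continue
        # Look for a prompt cancellation of this same order.
        cancel_idx = None
        for j in range(i + 1, len(ordered)):
            ev = ordered[j]
            if ev.time - first.time > window:
                break
            if ev.action == "cancel" and same_context(ev, first) and ev.item == first.item:
                cancel_idx = j
                break
        if cancel_idx is None:
            continue
        # Look for a different item ordered shortly after the cancellation.
        cancel = ordered[cancel_idx]
        reorder_item = None
        for k in range(cancel_idx + 1, len(ordered)):
            ev = ordered[k]
            if ev.time - cancel.time > window:
                break
            if ev.action == "order" and same_context(ev, first) and ev.item != first.item:
                reorder_item = ev.item
                break
        counts[(first.item, reorder_item)] += 1
    return counts
```

Calling `find_retract_patterns(events).most_common()` lists the patterns seen most often. A pattern that recurs across many providers points to the picklist rather than to any individual user.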

Although poor usability undoubtedly played a role in this case, focusing only on interface issues misses a much more serious and fundamental problem: the model of work inscribed in CPOE systems often clashes strongly with the real world of clinical work; that is, it is "ecologically invalid."(16,17) CPOE systems embrace an idealized model of clinical activity: the provider enters a fully specified order, which is then electronically transmitted to the appropriate department and executed. This might be called work-as-imagined; a logical and rational model that is unfortunately only a poor simulacrum of what actually happens. This simplistic model is typically found in procedure manuals or articulated in committee, task force, and focus group meetings that occur far from the actual work.

The opposite of work-as-imagined is work-as-done; what people actually do to achieve their goals under real-world conditions.(18) For a variety of reasons, in complex environments like health care, there is always a gap between these two visions of work.(19) This gap can be difficult even for the participants themselves to identify and is best elicited by skilled observations of real work in real settings.(20)

If such observations of the ordering process had been performed prior to the design of the CPOE system, they would have shown a more complex process in which a provider enters an order, and then the nurses and unit secretaries "fiddle" with the order to refine it and translate it into the organization's technical language before transmitting it to the appropriate department. In any complex, local work situation, various actors make these adjustments in order to get their work done in the face of local contingencies. For example, given an incompletely specified order, an expert nurse might recognize the issue and know to ask if the physician wanted only abdomen, or abdomen and pelvis; only oral contrast, only intravenous, or both, or none. This "articulation work" that knits the system together is typically hidden (particularly when performed by lower status personnel). It requires local intelligence about the work system and is essential to getting the job done.(21,22) The introduction of barcode medication administration systems has similarly been noted to disrupt existing patterns of physician–nurse–pharmacy articulation across time and space in unexpected ways.(23) By failing to include this invisible articulation work and its situated intelligence in the CPOE design, that work has not been eliminated but rather shifted to another party, in this case a physician who was ill-suited to doing it because she was new in this environment. Even the best user interface cannot compensate for an inherently imperfect model of work hardwired in the system; a more fundamental redesign to bring two models of work into better alignment is required.

Discourses about patient safety tend to involve a good deal of talk about systems thinking, but actually exhibit very little of it. The discussions of the unintended consequences of health IT (4,24-27) illustrate this problem. The literature suggests these side effects are unique and separate from the intended effects and impact of the EHR or CPOE. System science holds a different view about side effects. It holds that there are no side effects; there are only effects. It views side effects not as features of reality but rather signs, in Sterman's words, "that our understanding of the system is narrow and flawed."(28) Improving CPOE and other health IT systems will entail a good deal more than improving the user interface, important as that is. Rather, it will require abandoning the narrow and flawed, rationalized, logical models of care embedded in their designs (29) and replacing them with designs grounded in empirical studies and observations of the real world of clinical work. In other words, the goal should be moving work-as-imagined closer to work-as-done, rather than the opposite.

In this case, it is likely that the user interface and a picklist error contributed to this adverse event. A redesigned drop-down list could reduce the risk of future hazards. Importantly, a more sophisticated understanding of the work-as-done in ordering diagnostic tests might allow for the construction of a more robust CPOE system that would not only reduce the risk of error but improve efficiency and, ideally, patient care and provider satisfaction.

Take-Home Points

- Poorly designed user interfaces contain error traps that can introduce new risks to patients into clinical work systems.

- Usability evaluation should be part of system design, implementation, maintenance, and modification.

- User interfaces should follow well-established ergonomic principles to minimize error traps.

- System administrators should monitor order-retract-reorder instances to identify a class of user-interface problems in CPOE systems before patients are harmed.

- The basis of system design needs to shift from work-as-imagined to work-as-done.

Robert L. Wears, MD, PhD, Professor, Department of Emergency Medicine, University of Florida, Jacksonville, FL

Visiting Professor, Clinical Safety Research Unit, Imperial College London, London, UK

Faculty Disclosure: Dr. Wears has declared that neither he, nor any immediate members of his family, have a financial arrangement or other relationship with the manufacturers of any commercial products discussed in this continuing medical education activity. In addition, the commentary does not include information regarding investigational or off-label use of pharmaceutical products or medical devices.

References

1. Leapfrog Group. Leapfrog initiatives to drive great leaps in patient safety.

2. Ranji S, Wachter RM, Hartman EE. Patient Safety Primer: Computerized Provider Order Entry. AHRQ Patient Safety Network. Updated July 2016.

3. Ranji SR, Rennke S, Wachter RM. Computerised provider order entry combined with clinical decision support systems to improve medication safety: a narrative review. BMJ Qual Saf. 2014;23:773-780.

4. Ash JS, Sittig DF, Poon EG, Guappone K, Campbell E, Dykstra RH. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2007;14:415-423.

5. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293:1197-1203.

6. Bainbridge L. Ironies of automation: increasing levels of automation can increase, rather than decrease, the problems of supporting the human operator. In: Rasmussen J, Duncan K, Leplat J, eds. New Technology and Human Error. Chichester, UK: Wiley; 1987: 276-283. ISBN: 9780471910442.

7. Sarter NB, Woods DD. How in the world did we ever get into that mode? Mode error and awareness in supervisory control. Hum Factors. 1995;37:5-19.

8. Merrill M. HIT makes ECRI's top 10 list of hazardous technologies for 2011. December 6, 2010.

9. Flores F, Graves M, Hartfield B, Winograd T. Computer systems and the design of organizational interactions. ACM Trans Inf Syst. 1988;6:153-172.

10. Beuscart-Zéphir M-C, Pelayo S, Borycki E, Kushniruk A. Human factors considerations in health IT design and development. In: Carayon P, ed. Handbook of Human Factors and Ergonomics in Health Care and Patient Safety. 2nd ed. Boca Raton, FL: CRC Press; 2012: 649-670. ISBN: 9780805848854.

11. Norman DA. The Design of Everyday Things. New York: Basic Books; 1988. ISBN: 9780385267748.

12. Green RA, Hripcsak G, Salmasian H, et al. Intercepting wrong-patient orders in a computerized provider order entry system. Ann Emerg Med. 2015;65:679-686.e1.

13. Nielsen J. 10 usability heuristics for user interface design. January 1, 1995.

14. Shneiderman B, Plaisant C, Cohen MS, Jacobs SM. Designing the User Interface: Strategies for Effective Human–Computer Interaction. 5th ed. Cranbury, NJ: Pearson; 2010. ISBN: 9780321537355.

15. Adelman JS, Kalkut GE, Schechter CB, et al. Understanding and preventing wrong-patient electronic orders: a randomized controlled trial. J Am Med Inform Assoc. 2013;20:305-310.

16. Wears RL, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA. 2005;293:1261-1263.

17. Berg M. Health Information Management: Integrating Information Technology in Health Care Work. London, UK: Routledge; 2004. ISBN: 9780415315180.

18. Hollnagel E. Safety-I and Safety-II: The Past and Future of Safety Management. Farnham, UK: Ashgate; 2014. ISBN: 9781472423085.

19. Hollnagel E. Why is work-as-imagined different from work-as-done? In: Wears RL, Hollnagel E, Braithwaite J, eds. The Resilience of Everyday Clinical Work. Farnham, UK: Ashgate; 2015: 249-264. ISBN: 9781472437822.

20. Nemeth CP, Cook RI, Woods DD. The messy details: insights from the study of technical work in healthcare. IEEE Trans Syst Man Cybern. 2004;34:689-692.

21. Gerson EM, Star SL. Analyzing due process in the workplace. ACM Trans Inf Syst. 1986;4:257-270.

22. Suchman L. Making work visible. Commun ACM. 1995;38:56-64.

23. Novak LL, Lorenzi NM. Barcode medication administration: supporting transitions in articulation work. AMIA Annu Symp Proc. 2008:515-519.

24. Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13:547-556.

25. Harrison MI, Koppel R, Bar-Lev S. Unintended consequences of information technologies in health care—an interactive sociotechnical analysis. J Am Med Inform Assoc. 2007;14:542-549.

26. Weiner JP, Kfuri T, Chan K, Fowles JB. "e-Iatrogenesis": the most critical unintended consequence of CPOE and other HIT. J Am Med Inform Assoc. 2007;14:387-388.

27. Strom BL, Schinnar R, Aberra F, et al. Unintended effects of a computerized physician order entry nearly hard-stop alert to prevent a drug interaction: a randomized controlled trial. Arch Intern Med. 2010;170:1578-1583.

28. Sterman JD. Business Dynamics: Systems Thinking and Modeling for a Complex World. Boston, MA: Irwin McGraw-Hill; 2000. ISBN: 9780072389159.

29. Berwick DM. Improvement, trust, and the healthcare workforce. Qual Saf Health Care. 2003;12:448-452.

Figure

Example Picklist Resulting From Typing "CT abd" Into an Order Search*

*It's not the publisher's mistake—one of these entries is a duplicate on this screen.