Root Cause Analysis Gone Wrong

Peerally MF, Dixon-Woods M. Root Cause Analysis Gone Wrong. PSNet [internet]. Rockville (MD): Agency for Healthcare Research and Quality, US Department of Health and Human Services. 2018.


The Case

A 42-year-old man with a history of end-stage renal disease on hemodialysis was awaiting a kidney transplant. A suitable donor was identified, and the patient was taken to the operating room for the procedure. The surgery was uneventful, and the transplanted kidney was connected successfully. As the procedure was drawing to a close, the surgeon instructed the anesthesiologist to give 3000 units of intravenous heparin (an anticoagulant) as part of the standard protocol to prevent graft thrombosis.

The surgical team was preparing to close the incision when the clinicians noticed blood in the surgical field. They performed a careful search for bleeding, but did not find a clear source, and the bleeding continued to worsen. The patient's blood pressure began to drop, and transfusions were administered while the team tried to stop the bleeding. At that point, the anesthesiologist reviewed the medications and realized that he had accidentally administered 30,000 units of heparin—not 3000 units. He immediately administered protamine to reverse the anticoagulant effect. However, the persistent bleeding and hypotension had irreversibly damaged the transplanted kidney. The kidney was explanted, and the patient was transferred to the intensive care unit in critical condition. The error was disclosed to the patient and his family, and he eventually recovered and was discharged home. He continued to receive hemodialysis while awaiting another transplant.

The hospital planned to perform a root cause analysis (RCA) to investigate the adverse event. The transplant surgeon was furious about the error and complained angrily to the operating room staff, chief of anesthesiology, and chief medical officer (CMO) about the "incompetence" that had resulted in the error. Although the hospital's patient safety officer attempted to conduct the RCA in a nonjudgmental fashion, she found it very difficult to focus the investigation on possible systems issues, as it seemed that all the personnel involved had already decided the anesthesiologist was to blame. The transplant surgeon was influential at the hospital, and under pressure from the CMO, the chief of anesthesiology decided to dismiss the anesthesiologist. The RCA was completed, but the conclusions focused only on the individual anesthesiologist, and no interventions to prevent similar errors or address systems issues were ever implemented.

The Commentary

Commentary by Mohammad Farhad Peerally, MBChB, MRCP, and Mary Dixon-Woods, DPhil

Root cause analysis (RCA) is a widely used method deployed following adverse events in health care.(1) Using a range of information-gathering and analytical tools (such as interviews, the "five whys" technique, fishbone diagrams, change analysis, and others), RCA seeks to understand what happened and why and to identify how to prevent future incidents. The practice of RCA for sentinel events (e.g., incidents resulting in death, permanent harm, or severe temporary harm) has been mandated by The Joint Commission in the United States for more than 15 years (2) and is widely adopted in many other high-income nations.(3) Despite its ubiquity, whether RCA improves patient safety remains unclear.(4,5) For example, a recent longitudinal mixed-methods study investigating 302 RCAs over an 8-year period showed that similar adverse events (such as retained foreign objects during surgery) recurred after RCA recommendations were implemented.(6)

Many of the challenges associated with RCA in health care have been previously described.(4) The case above illustrates some of those associated with migrating practices from other safety critical industries (7) to health care without adequate fidelity to the underlying principles or customization for the health care context. Striking problems in this case include compromises of objectivity, deference to hierarchy, and lack of expertise in safety science on the part of the investigation team.

These problems happen in part because in health care, in contrast with industries such as aviation, those conducting RCA generally work in the place where the incident occurred.(4) The result is that some voices, perhaps those of senior physicians or other powerful individuals, may dominate.(8) Small local teams also often lack specialized skills, such as the expertise in human factors engineering needed to achieve a deep understanding of an incident and to identify, select, and test solutions.(9) Thus, poorly done RCAs may perversely promote a blame culture and frustrate organizational learning. As this case illustrates, no true learning may occur, and the problem investigated may be just as likely to recur as before the RCA. Some organizations now invite peer reviewers from outside the department or organization where the incident happened to provide a fresh perspective on the outcome of investigations and suggested action plans.(10) Nonetheless, the quality of investigation clearly still needs to be assured.

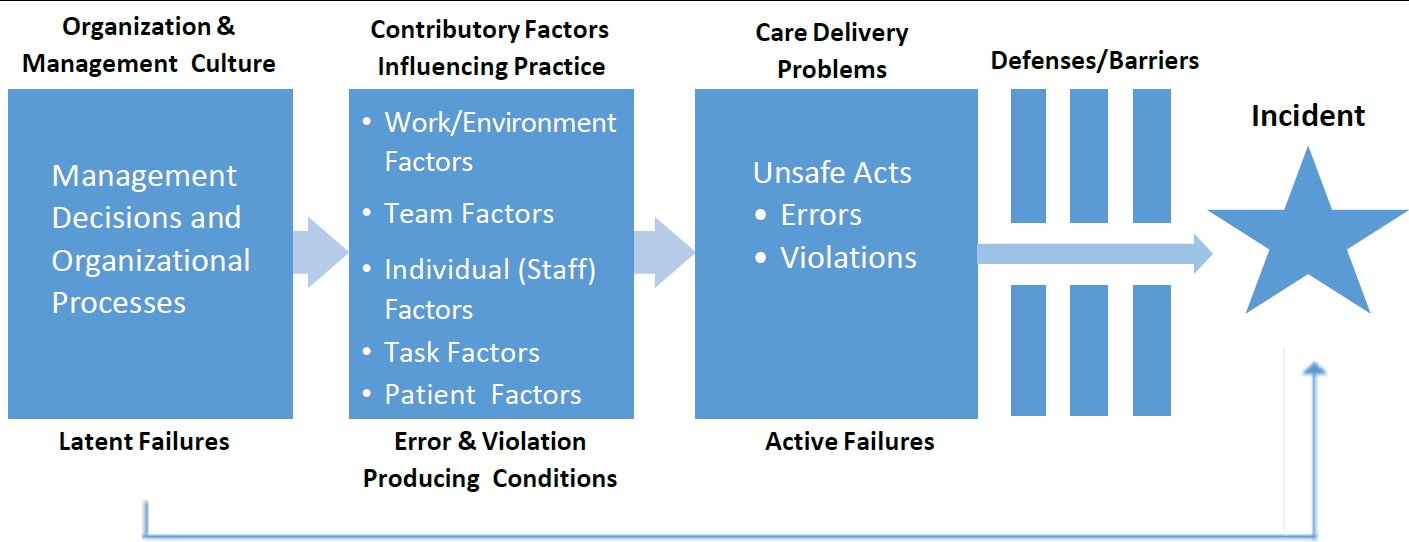

Use of standardized evidence-based frameworks may have an important role in improving investigations. Examples include the Yorkshire Contributory Factors Framework (11) and the London Protocol, which is based on the Organizational Accident Causation Model (Figure).(12) Such frameworks facilitate consideration of how latent conditions (in this case, poor design) may influence failures, instead of solely focusing on active failures (in this case, the anesthesiologist's unfortunate drawing up of the wrong dose).

Health care should also recognize that RCA need not be synonymous with incident investigation. RCA is just one of a number of methods available for investigating incidents. Newer paradigms of accident causation and analysis draw on systems theory. The systems approach assumes that properties of a complex organization (or system) can only be appreciated when viewing the system as a whole, as opposed to viewing each subsystem separately. Each component of a bigger system maintains its current state through feedback loops or constraints. Adverse events can then be seen as arising from inadequate control of these constraints between the different subsystems (people, social, organizational structures, automated activities, hardware, and software).(13) Examples of approaches based on systems theory include the systems-theoretic accident model and processes (14) and AcciMap methods.(15) A short animation of what a system-based health care incident investigation looks like is available.(16)

Perhaps the most important challenge for incident investigation, however, concerns the phase that is often the one most poorly done: recommendations for remedial or corrective action.(4,17) Again, widely recommended approaches from other industries, such as the hierarchy of risk controls (18), have never been properly evaluated in a health care context.(19) A potentially more useful approach may involve phenotyping incidents and recommending actions based on a sound theory of change.(20) This approach would entail a deep understanding of the relationship between proposed actions and causal factors identified through the investigation, and of the mechanisms through which the proposed actions would lead to the ultimate goal (in this case, elimination of tenfold drug dosing errors).(21)

Another important consideration is choosing the right level of the system at which to intervene.(22) The local practice of RCA has tended to favor the development of local solutions, when many of the challenges—as in this case—would benefit from a standardized approach across the whole health system, so that people learn once rather than having to continually attend to local custom. In the case above, for example, it is possible that a technological solution might be found to the wrong-dose problem, but it is unlikely to be found by a single local team. Further, if such a solution does emerge, it needs to be implemented across the health system to avoid the risks of some hospitals continuing to rely on individual vigilance. This means that health care increasingly needs to solve the problem of many hands (23), where too little safety improvement action is coordinated at a supraorganizational level.
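To make the idea of a technological safeguard concrete, the following is a minimal, purely illustrative sketch of one possible form it could take: an automated cross-check that compares the dose prepared against the dose ordered and blocks administration when the two differ by more than a set factor. It is not drawn from the case or from any real clinical system. The function name, the `DoseCheck` record, and the 1.5-fold tolerance are all hypothetical assumptions, and a real safeguard would need clinical, pharmacy, and human factors input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DoseCheck:
    """Result of comparing a prepared dose with the ordered dose (hypothetical record)."""
    ordered_units: float
    prepared_units: float
    ratio: float
    blocked: bool


def check_prepared_dose(ordered_units: float,
                        prepared_units: float,
                        max_ratio: float = 1.5) -> DoseCheck:
    """Flag a prepared dose that deviates too far from the order.

    max_ratio is an assumed tolerance chosen for illustration only;
    it is not a clinical recommendation.
    """
    if ordered_units <= 0 or prepared_units <= 0:
        raise ValueError("doses must be positive")
    if max_ratio < 1:
        raise ValueError("max_ratio must be at least 1")
    ratio = prepared_units / ordered_units
    # Block both overdoses and underdoses beyond the tolerance.
    blocked = ratio > max_ratio or ratio < 1 / max_ratio
    return DoseCheck(ordered_units, prepared_units, ratio, blocked)


# The doses from the case: 3000 units ordered, 30,000 units given.
result = check_prepared_dose(ordered_units=3000, prepared_units=30000)
if result.blocked:
    print(f"Hard stop: prepared dose is {result.ratio:g} times the ordered dose")
```

A check of this kind only helps if it is built into the workflow everywhere the drug is prepared, which is why the argument above favors system-wide implementation over reliance on individual vigilance.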

Once a risk control or other safety solution is introduced, health care organizations need to monitor implementation and effectiveness.(4) To this end, the Commercial Aviation Safety Team method (24), which uses process measures to monitor both implementation and impact of recommendations, has been adopted in some hospitals and, though it requires further evaluation in the health care setting, offers some promise.(25) Another innovative approach is the use of immersive simulation exercises based on real adverse events (26) to promote participative and experiential learning and a culture of active reflection.

Some developments in the United Kingdom are also encouraging. An independent Healthcare Safety Investigation Branch (HSIB) has been established with the aim of conducting thematic analysis of similar incidents across multiple organizations in order to make safety recommendations and produce learning. Among other things, HSIB will look to build a skilled workforce combining health care risk managers, clinicians, and expert investigators from other safety critical industries.(27) If this model proves successful, it may be a useful one for other countries.

Incident investigation must be more than a simple procedural ritual in the aftermath of a patient safety event, and the temptation to appropriate it as a blame-engineering exercise must be resisted. Its purpose should remain relentlessly focused on how to make improvements, and how to make them in the right way.

Take-Home Points

- Incident investigation following an adverse event requires an organizational safety culture that fosters open dialogue surrounding medical errors to maximize organizational learning.

- Root cause analysis is only one of many methods of incident investigation. Newer methods promote a systemic view of incident investigations and analysis.

- Incident investigations in health care need to be conducted by professionals skilled in human factors engineering and systems thinking, alongside expert clinicians and frontline staff.

- The recommendation phase should receive more attention. Corrective actions need to be congruent to the causal factors they are trying to address and monitored for successful implementation and risk mitigation.

- Some problems identified through root cause analysis cannot be solved by individual organizations but require coordinated efforts across the entire health care system.

Mohammad Farhad Peerally, MBChB, MRCP

Clinical Research Fellow

SAPPHIRE

Department of Health Sciences

University of Leicester

Leicester, UK

Mary Dixon-Woods, DPhil

RAND Professor of Health Services Research and Wellcome Trust Investigator

Director, The Healthcare Improvement Studies Institute

Co-director, Cambridge Centre for Health Services Research

Cambridge, UK

References

1. Bagian JP, Gosbee J, Lee CZ, Williams L, McKnight SD, Mannos DM. The Veterans Affairs root cause analysis system in action. Jt Comm J Qual Improv. 2002;28:531-545.

2. Sentinel Events. In: Comprehensive Accreditation Manual for Hospitals. CAMH Update 1. Oakbrook Terrace, IL: Joint Commission; July 2017:SE1-SE20.

3. Key Findings and Recommendations. Education and Training in Patient Safety Across Europe. Brussels, Belgium: Education and Training in Patient Safety Subgroup of the Patient Safety and Quality of Care Working Group of the European Commission; 2014.

4. Peerally MF, Carr S, Waring J, Dixon-Woods M. The problem with root cause analysis. BMJ Qual Saf. 2017;26:417-422.

5. Percarpio KB, Watts BV, Weeks WB. The effectiveness of root cause analysis: what does the literature tell us? Jt Comm J Qual Patient Saf. 2008;34:391-398.

6. Kellogg KM, Hettinger Z, Shah M, et al. Our current approach to root cause analysis: is it contributing to our failure to improve patient safety? BMJ Qual Saf. 2017;26:381-387.

7. Macrae C. Close Calls: Managing Risk and Resilience in Airline Flight Safety. London, United Kingdom: Palgrave Macmillan; 2014. ISBN: 9781349306329.

8. Nicolini D, Waring J, Mengis J. Policy and practice in the use of root cause analysis to investigate clinical adverse events: mind the gap. Soc Sci Med. 2011;73:217-225.

9. Investigating Clinical Incidents in the NHS. Sixth Report of Session 2014–15. House of Commons Public Administration Select Committee. London, England: The Stationery Office; March 27, 2015. Publication HC 886.

10. Carthey J. Implementing Human Factors in Healthcare: Taking Further Steps. UK: Clinical Human Factors Group; July 2013.

11. Lawton R, McEachan RR, Giles SJ, Sirriyeh R, Watt IS, Wright J. Development of an evidence-based framework of factors contributing to patient safety incidents in hospital settings: a systematic review. BMJ Qual Saf. 2012;21:369-380.

12. Taylor-Adams S, Vincent C. Systems analysis of clinical incidents: the London Protocol. Clin Risk. 2004;10:211-220.

13. Qureshi ZH. A review of accident modelling approaches for complex socio-technical systems. Proceedings of the 12th Australian Workshop on Safety Critical Systems and Software and Safety-Related Programmable Systems. 2007;86:47-59.

14. Leveson N, Samost A, Dekker S, Finkelstein S, Raman J. A systems approach to analyzing and preventing hospital adverse events. J Patient Saf. 2016 Jan 11; [Epub ahead of print].

15. Rasmussen J. Risk management in a dynamic society: a modelling problem. Saf Sci. 1997;27:183-213.

16. Jun T, Waterson P. Systems Thinking: A New Direction in Healthcare Incident Investigation. UK: Clinical Human Factors Group; July 2013.

17. Card AJ, Ward J, Clarkson PJ. Successful risk assessment may not always lead to successful risk control: a systematic literature review of risk control after root cause analysis. J Healthc Risk Manag. 2012;31:6-12.

18. RCA2: Improving Root Cause Analyses and Actions to Prevent Harm. Boston, MA: National Patient Safety Foundation; 2015.

19. Liberati EG, Peerally MF, Dixon-Woods M. Learning from high risk industries may not be straightforward: a qualitative study of the hierarchy of risk controls approach in healthcare. Int J Qual Health Care. 2018;30:39-43.

20. Davidoff F, Dixon-Woods M, Leviton L, Michie S. Demystifying theory and its use in improvement. BMJ Qual Saf. 2015;24:228-238.

21. Dixon-Woods M, Bosk CL, Aveling EL, Goeschel CA, Pronovost PJ. Explaining Michigan: developing an ex post theory of a quality improvement program. Milbank Q. 2011;89:167-205.

22. Dixon-Woods M, Martin GP. Does quality improvement improve quality? Future Hosp J. 2016;3:191-194.

23. Dixon-Woods M, Pronovost PJ. Patient safety and the problem of many hands. BMJ Qual Saf. 2016;25:485-488.

24. Commercial Aviation Safety Team. Fact Sheet; 2011.

25. Pham JC, Kim GR, Natterman JP, et al. ReCASTing the RCA: an improved model for performing root cause analyses. Am J Med Qual. 2010;25:186-191.

26. Peerally MF, Fores M, Powell R, Durbridge M, Carr S. 0192 Implementing Themes From Serious Incidents Into Simulation Training For Junior Doctors. BMJ Simul Technol Enhanc Learn. 2014;1:A22.

27. Healthcare Safety Investigation Branch.

Figure

Adapted Organizational Accident Causation Model.(12)